Using AI Confidence Thresholds for Automation in Rossum

The confidence score indicates how confident the AI engine is that it captured both the text and the location of a field correctly. Confidence scores range from 0 to 1.

When starting with automation, we offer an option to automate the processing of fields where the AI engine confidence score is higher than a certain threshold.

By default, when you enable "Confident" automation, all data fields captured in the selected queue have a confidence threshold of 0.975. The AI's confidence score must exceed this threshold before data can be exported automatically.

Setting the bar this high allows for very little, if any, automation by default. It also ensures that the document will stop for manual review and confirmation unless the AI is extremely confident in every single data field it captures.
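The rule described above can be sketched in a few lines of Python. This is only an illustration, not Rossum's implementation: the field ids, the sample scores, and the `can_automate` helper are assumptions made for the example.

```python
DEFAULT_THRESHOLD = 0.975  # default threshold for "Confident" automation

def can_automate(field_scores, threshold=DEFAULT_THRESHOLD):
    """Return True only if every field's confidence is higher than the threshold.

    field_scores: dict mapping a (hypothetical) field id to a confidence in [0, 1].
    """
    return all(score > threshold for score in field_scores.values())

# Made-up scores for a single document.
invoice = {"invoice_id": 0.991, "amount_total": 0.983, "date_due": 0.942}

print(can_automate(invoice))       # date_due is below 0.975, so the document stops for review
print(can_automate(invoice, 0.9))  # with a lower threshold every field passes
```

A single field below the threshold is enough to stop the whole document for manual review, which is why the default setting automates very little.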

But what does this threshold mean numerically? We calibrate the confidence scores to correspond to the probability of a given value being right. This means that the 0.975 threshold expresses a requirement for 97.5% accuracy, i.e., documents sent automatically to accounts payable software should have an error rate of at most 2.5%.

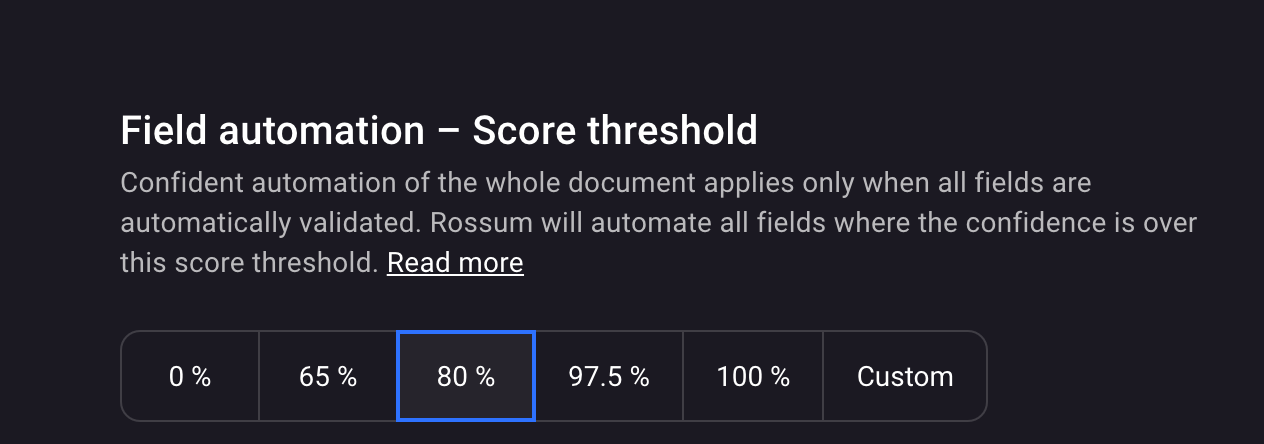

Setting confidence score in the app

You can set the confidence score threshold for a target queue in the app. This threshold will be applied to all the fields that are set to be extracted in this queue:

- Select the "Automation" tab.

- Pick the target queue.

- Select "Confident" automation.

- Scroll to the "Field automation - Score threshold" part.

- Define the necessary confidence score threshold.

Updating confidence score threshold.

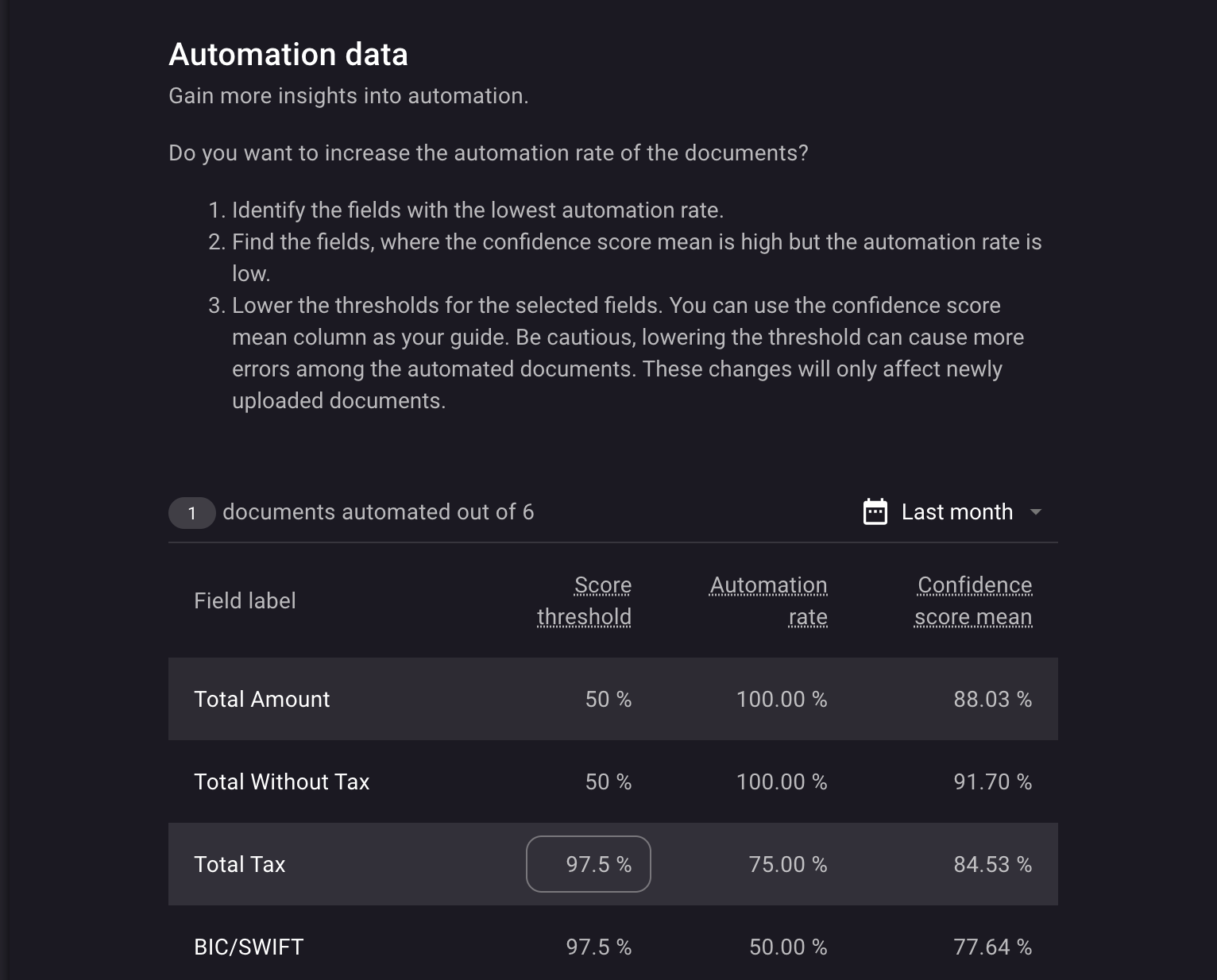

Different score thresholds among fields

There are situations where some fields require different confidence score thresholds, for example when you are not particularly concerned about the error rate for a specific field. A per-field confidence score threshold can be set in the extraction schema.

Follow the steps below to achieve this goal:

- Select the "Automation" tab.

- Pick the target queue.

- Select "Confident" automation.

- Scroll to the "Automation data" section.

- Update the score threshold for the fields in the "Automation data" table.

Setting up confidence score threshold for a specific field.

Note: The extraction schema is recommended as the place to change the threshold. You can use the automation data table to change the threshold based on insights.
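How a per-field threshold might take precedence over the queue-wide one can be illustrated as follows. The `score_threshold` key, the field ids, and the `effective_threshold` helper are assumptions for this sketch; check your own extraction schema for the actual attribute name and structure.

```python
QUEUE_THRESHOLD = 0.975  # queue-wide threshold from "Confident" automation

# Hypothetical schema fragment: only "notes" overrides the queue-wide threshold.
schema_fields = [
    {"id": "amount_total"},
    {"id": "notes", "score_threshold": 0.8},
]

def effective_threshold(field, queue_threshold=QUEUE_THRESHOLD):
    """Use the field's own threshold if it is set, otherwise the queue-wide one."""
    value = field.get("score_threshold")
    return queue_threshold if value is None else value

thresholds = {f["id"]: effective_threshold(f) for f in schema_fields}
print(thresholds)
```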

How does this help to automate the documents?

If a field's confidence score is higher than the score threshold, you will see a "score" entry in the datapoint's validation sources:

validation_sources:["score"]
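If you inspect extracted data programmatically, a check along these lines could list the fields that were not validated by their confidence score. It is a minimal sketch: the datapoint dictionaries and the `schema_id` key are hypothetical, and only the `validation_sources` list with its "score" entry comes from the description above.

```python
def automated_by_score(datapoint):
    """True if "score" is among the datapoint's validation sources."""
    return "score" in datapoint.get("validation_sources", [])

# Hypothetical datapoints, for illustration only.
datapoints = [
    {"schema_id": "invoice_id", "validation_sources": ["score"]},
    {"schema_id": "date_due", "validation_sources": []},
]

needs_review = [d["schema_id"] for d in datapoints if not automated_by_score(d)]
print(needs_review)
```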

Combining confidence scores with other automation components

Learn how the different automation components interact to automate documents, and how automation works with hidden and required fields.

What is the correct threshold?

When looking for the perfect threshold, there is a trade-off between how many documents will stop for manual review and how many documents will be automatically exported with an error.

Having a low threshold might lead to a lot of automatically exported documents. However, a lot of them may contain errors. Conversely, having a high threshold would stop most of the documents for manual review, which may mean more manual labor.

From our experience, customers looking for a high level of automation will need a Dedicated AI Engine. In these cases, the Rossum AI team will provide an in-depth automation score threshold analysis and the necessary fine-tuning.

However, you can do your own analysis based on the confidence score thresholds and the corresponding error rate. Consider adjusting confidence thresholds to match your requirements and error rate tolerance and customizing the platform with custom business rules.
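One way to run such an analysis is to take field values that have already been reviewed, record each value's confidence score and whether it turned out to be correct, and then compare several candidate thresholds. The sketch below assumes you have collected such pairs yourself; the `sweep` helper and the sample data are made up for illustration.

```python
def sweep(records, thresholds):
    """For each threshold, report the automated share and its error rate.

    records: list of (confidence, was_correct) pairs from reviewed field values.
    """
    rows = []
    for t in thresholds:
        passed = [ok for conf, ok in records if conf > t]
        automated = len(passed) / len(records)
        error_rate = passed.count(False) / len(passed) if passed else 0.0
        rows.append((t, automated, error_rate))
    return rows

# Made-up sample data, for illustration only.
records = [(0.99, True), (0.98, True), (0.96, True), (0.95, False),
           (0.90, True), (0.85, False), (0.80, True), (0.60, False)]

for t, automated, error_rate in sweep(records, [0.5, 0.9, 0.975]):
    print(f"threshold={t:.3f} automated={automated:.0%} error_rate={error_rate:.0%}")
```

Raising the threshold lowers the error rate among automated values but also shrinks the automated share, which is the trade-off described above.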

Confidence scores vs. accuracy estimates

As mentioned above, the AI engine's confidence scores represent the accuracy estimates of any given extraction. Therefore, the confidence score threshold should ideally correspond to the desired average accuracy level.

In practice, estimating this accuracy correctly in all cases is still an unsolved problem in Artificial Intelligence. Our research team is working hard to improve the accuracy of our estimates. Nevertheless, we recommend you conduct experiments on your particular data set to ensure the confidence scores match your needs.
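A simple experiment of this kind is to group reviewed field values into confidence bins and compare the mean confidence in each bin with the accuracy actually observed. The following is a rough sketch under the assumption that you have (confidence, was_correct) pairs from your own data; the bin edges, the `calibration_table` helper, and the sample values are illustrative.

```python
def calibration_table(records, edges=(0.0, 0.9, 0.975, 1.0)):
    """Compare mean confidence with observed accuracy in each score bin.

    records: list of (confidence, was_correct) pairs from reviewed field values.
    Bins are (low, high]; the first bin also includes its lower edge.
    """
    table = []
    for low, high in zip(edges, edges[1:]):
        in_bin = [(c, ok) for c, ok in records
                  if low < c <= high or (c == low and low == edges[0])]
        if not in_bin:
            continue  # skip empty bins
        mean_conf = sum(c for c, _ in in_bin) / len(in_bin)
        accuracy = sum(1 for _, ok in in_bin if ok) / len(in_bin)
        table.append((low, high, len(in_bin), mean_conf, accuracy))
    return table

# Made-up sample data, for illustration only.
records = [(0.99, True), (0.98, True), (0.95, True),
           (0.92, True), (0.80, True), (0.60, False)]

for low, high, n, mean_conf, accuracy in calibration_table(records):
    print(f"({low}, {high}]: n={n} mean_confidence={mean_conf:.3f} accuracy={accuracy:.3f}")
```

If the observed accuracy in a bin is consistently higher than the mean confidence, the scores are pessimistic for your data and a lower threshold may be acceptable; a real analysis needs far more samples per bin than this toy data set.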

In general, you may find Rossum's AI engine a little pessimistic, with real accuracy higher than hinted by the typical scores, but this may vary field by field.
